Thank you for your trust over the past year! To thank you, we are offering FREE shipping (within Belgium) on everything throughout January.

- Pick up after 1 hour at a store with stock

- Free home delivery in Belgium in January

- Wide range of 7 million products

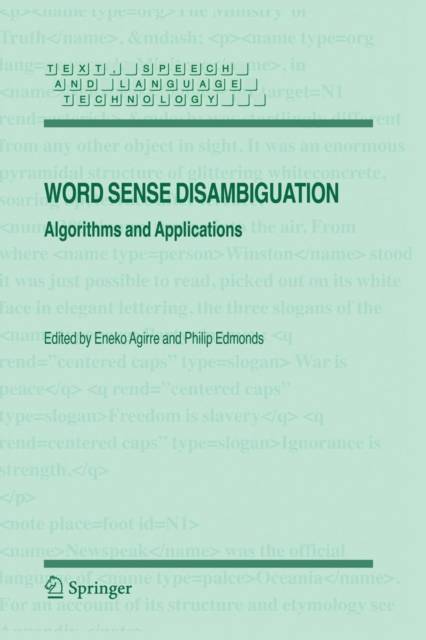

Word Sense Disambiguation

Algorithms and Applications

€ 76,95

+ 153 points

Description

Graeme Hirst, University of Toronto. Of the many kinds of ambiguity in language, the two that have received the most attention in computational linguistics are those of word senses and those of syntactic structure, and the reasons for this are clear: these ambiguities are overt, their resolution is seemingly essential for any practical application, and they seem to require a wide variety of methods and knowledge-sources with no pattern apparent in what any particular instance requires. Right at the birth of artificial intelligence, in his 1950 paper "Computing machinery and intelligence", Alan Turing saw the ability to understand language as an essential test of intelligence, and an essential test of language understanding was an ability to disambiguate; his example involved deciding between the generic and specific readings of the phrase "a winter's day". The first generations of AI researchers found it easy to construct examples of ambiguities whose resolution seemed to require vast knowledge and deep understanding of the world and complex inference on this knowledge; for example, "Pharmacists dispense with accuracy". The disambiguation problem was, in a way, nothing less than the artificial intelligence problem itself. No use was seen for a disambiguation method that was less than 100% perfect; either it worked or it didn't. Lexical resources, such as they were, were considered secondary to non-linguistic common-sense knowledge of the world.

Specifications

Contributors

- Publisher:

Contents

- Number of pages:

- 366

- Language:

- English

- Series:

- Series number:

- no. 33

Properties

- Product code (EAN):

- 9781402068706

- Publication date:

- 5/11/2007

- Binding:

- Paperback

- Format:

- Trade paperback (US)

- Dimensions:

- 156 mm x 234 mm

- Weight:

- 548 g

Only at Standaard Boekhandel

+ 153 points on your Standaard Boekhandel loyalty card

Reviews

We only publish reviews that meet our review conditions. See our review conditions.