Zoeken

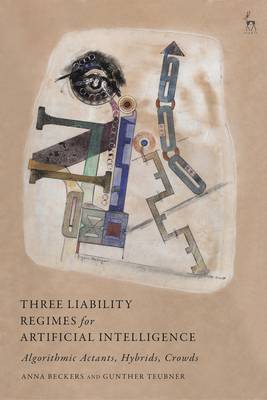

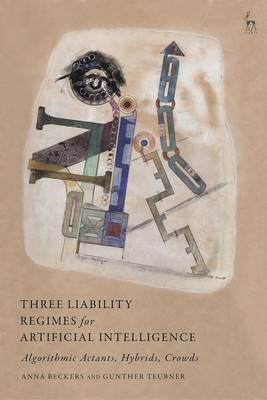

Three Liability Regimes for Artificial Intelligence

Algorithmic Actants, Hybrids, Crowds

Anna Beckers, Gunther Teubner

Paperback | English

€ 99,95

+ 199 points

Description

This book proposes three liability regimes to combat the wide responsibility gaps caused by AI systems - vicarious liability for autonomous software agents (actants); enterprise liability for inseparable human-AI interactions (hybrids); and collective fund liability for interconnected AI systems (crowds).

Based on information technology studies, the book first develops a threefold typology that distinguishes individual, hybrid and collective machine behaviour. A subsequent social science analysis specifies the socio-digital institutions related to this threefold typology. Then it determines the social risks that emerge when algorithms operate within these institutions. Actants raise the risk of digital autonomy, hybrids the risk of double contingency in human-algorithm encounters, crowds the risk of opaque interconnections. The book demonstrates that the law needs to respond to these specific risks by recognising personified algorithms as vicarious agents, human-machine associations as collective enterprises, and interconnected systems as risk pools, and by developing corresponding liability rules. The book relies on a unique combination of information technology studies, sociological institution and risk analysis, and comparative law. This approach uncovers recursive relations between types of machine behaviour, emergent socio-digital institutions, their concomitant risks, legal conditions of liability rules, and ascription of legal status to the algorithms involved.

Specifications

Contributors

- Author(s): Anna Beckers, Gunther Teubner

- Publisher:

Contents

- Number of pages: 240

- Language: English

Properties

- Product code (EAN): 9781509949373

- Publication date: 15/06/2023

- Binding: Paperback

- Format: Trade paperback (US)

- Dimensions: 156 mm x 234 mm

- Weight: 290 g
