- Pick up after 1 hour in a store with stock

- Free home delivery in Belgium from € 30

- Wide range of 7 million products

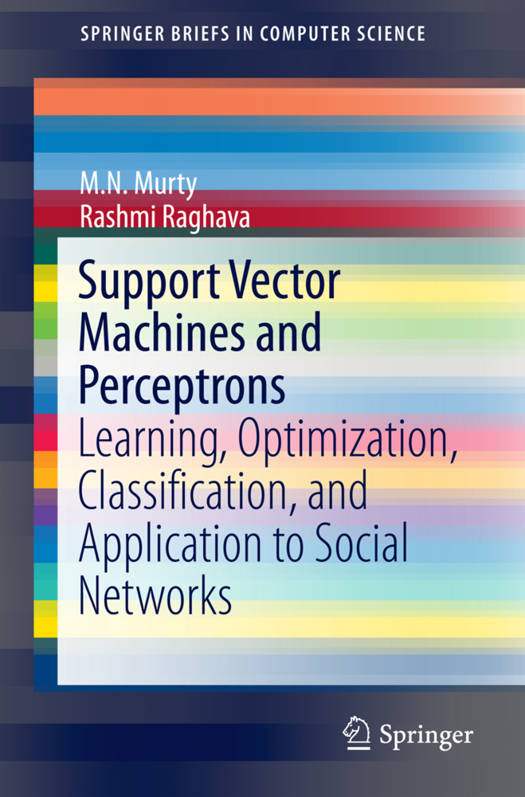

Support Vector Machines and Perceptrons

Learning, Optimization, Classification, and Application to Social Networks

M N Murty, Rashmi Raghava

Description

This work reviews the state of the art in SVM and perceptron classifiers. The Support Vector Machine (SVM) is among the most popular tools for a variety of machine-learning tasks, including classification. SVMs maximize the margin between two classes. The associated optimization problem is convex, which guarantees a globally optimal solution. The SVM weight vector is a linear combination of some of the boundary and noisy vectors. Further, when the data are not linearly separable, tuning the coefficient of the regularization term becomes crucial. Even though SVMs have popularized the kernel trick, linear SVMs are the usual choice in most high-dimensional practical applications. The text examines applications to social and information networks. The work also discusses another popular linear classifier, the perceptron, and compares its performance with that of the SVM in different application areas.

Specifications

Contributors

- Author(s):

- Publisher:

Contents

- Number of pages: 95

- Language: English

- Series:

Properties

- Product code (EAN): 9783319410623

- Publication date: 25/08/2016

- Binding: Paperback

- Format: Trade paperback (US)

- Dimensions: 156 mm x 234 mm

- Weight: 167 g
