- Pick up after 1 hour from a store that has the item in stock

- Free home delivery in Belgium on orders from € 30

- Wide range of 7 million products

Description

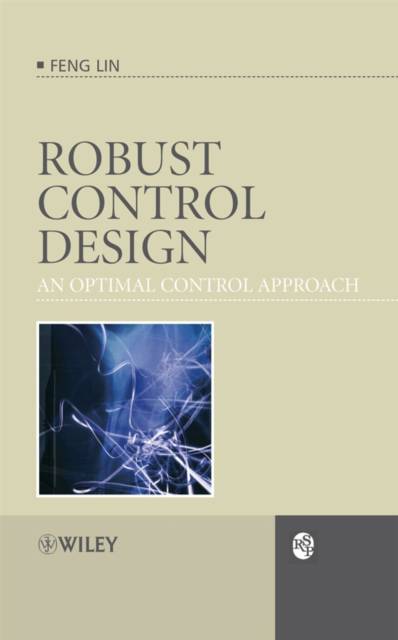

Optimal control is a mathematical field concerned with control policies that can be derived using optimization algorithms. The optimal control approach to robust control design differs from the more commonly discussed direct approaches: it first translates the robust control problem into its optimal control counterpart and then solves that optimal control problem.

Robust Control Design: An Optimal Control Approach offers a complete presentation of this approach to robust control design, presenting modern control theory in a concise manner. The other two major approaches to robust control design, the H-infinity approach and the Kharitonov approach, are also covered and described in the simplest terms possible, in order to provide a complete overview of the area. The book includes up-to-date research and offers both theoretical and practical applications, including flexible structures, robotics, and automotive and aircraft control.

Robust Control Design: An Optimal Control Approach will be of interest to those needing an introductory textbook on robust control theory, design and applications, as well as to graduate and postgraduate students involved in systems and control research. Practitioners will also find the applications presented useful when solving practical engineering problems.

Specifications

Contributors

- Author(s):

- Publisher:

Contents

- Number of pages:

- 384

- Language:

- English

- Series:

Properties

- Product code (EAN):

- 9780470031919

- Publication date:

- 11/09/2007

- Binding:

- Hardcover

- Format:

- Sewn

- Dimensions:

- 164 mm x 233 mm

- Weight:

- 666 g
