- Pick up after 1 hour in a store with stock

- Free home delivery in Belgium from € 30

- Wide range of 7 million products

Description

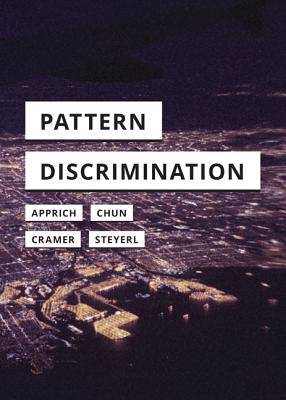

How do "human" prejudices reemerge in algorithmic cultures allegedly devised to be blind to them? To answer this question, this book investigates a fundamental axiom in computer science: pattern discrimination. By imposing identity on input data, in order to filter--that is, to discriminate--signals from noise, patterns become a highly political issue. Algorithmic identity politics reinstate old forms of social segregation, such as class, race, and gender, through defaults and paradigmatic assumptions about the homophilic nature of connection.

Instead of providing a more "objective" basis of decision making, machine-learning algorithms deepen bias and further inscribe inequality into media. Yet pattern discrimination is an essential part of human--and nonhuman--cognition. Bringing together media thinkers and artists from the United States and Germany, this volume asks the urgent questions: How can we discriminate without being discriminatory? How can we filter information out of data without reinserting racist, sexist, and classist beliefs? How can we queer homophilic tendencies within digital cultures?

Specifications

Contributors

- Author(s):

- Publisher:

Contents

- Number of pages: 144

- Language: English

- Series:

Properties

- Product code (EAN): 9781517906450

- Publication date: 13/11/2018

- Binding: Paperback

- Format: Trade paperback (US)

- Dimensions: 130 mm x 175 mm

- Weight: 158 g
