- Pick up after 1 hour in a store with stock

- Free home delivery in Belgium from € 30

- Wide range of 7 million products

Description

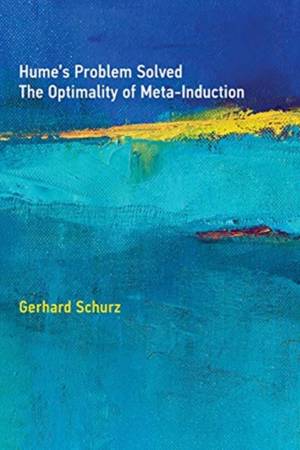

Hume's problem of justifying induction has been among epistemology's greatest challenges for centuries. In this book, Gerhard Schurz proposes a new approach to Hume's problem. Acknowledging the force of Hume's arguments against the possibility of a noncircular justification of the reliability of induction, Schurz demonstrates instead the possibility of a noncircular justification of the optimality of induction, or, more precisely, of meta-induction (the application of induction to competing prediction models). Drawing on discoveries in computational learning theory, Schurz demonstrates that a regret-based learning strategy, attractivity-weighted meta-induction, is predictively optimal in all possible worlds among all prediction methods accessible to the epistemic agent. Moreover, the a priori justification of meta-induction generates a noncircular a posteriori justification of object induction. Taken together, these two results provide a noncircular solution to Hume's problem.

Schurz discusses the philosophical debate on the problem of induction, addressing all major attempts at a solution to Hume's problem and describing their shortcomings; presents a series of theorems, accompanied by a description of computer simulations illustrating the content of these theorems (with proofs presented in a mathematical appendix); and defends, refines, and applies core insights regarding the optimality of meta-induction, explaining applications in neighboring disciplines including forecasting sciences, cognitive science, social epistemology, and generalized evolution theory. Finally, Schurz generalizes the method of optimality-based justification to a new strategy of justification in epistemology, arguing that optimality justifications can avoid the problems of justificatory circularity and regress.

Specifications

Contributors

- Author(s):

- Publisher:

Contents

- Number of pages: 400

- Language: English

- Series:

Properties

- Product code (EAN): 9780262039727

- Publication date: 7 May 2019

- Edition: Hardcover

- Binding: Sewn

- Dimensions: 152 mm x 231 mm

- Weight: 612 g
