- Collect after 1 hour from a store that has the item in stock

- Free home delivery in Belgium from € 30

- Wide selection of 7 million products

€ 67,95

+ 135 points

Description

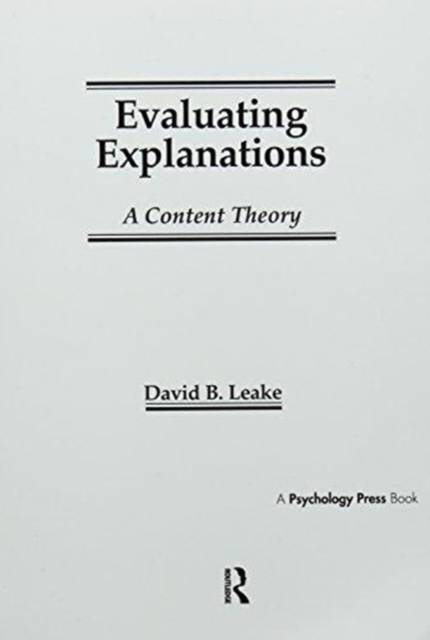

Psychology and philosophy have long studied the nature and role of explanation. More recently, artificial intelligence research has developed promising theories of how explanation facilitates learning and generalization. By using explanations to guide learning, explanation-based methods allow reliable learning of new concepts in complex situations, often from observing a single example.

The author of this volume, however, argues that explanation-based learning research has neglected key issues in explanation construction and evaluation. By examining the issues in the context of a story understanding system that explains novel events in news stories, the author shows that the standard assumptions do not apply to complex real-world domains. An alternative theory is presented, one that demonstrates that context -- involving both explainer beliefs and goals -- is crucial in deciding an explanation's goodness and that a theory of the possible contexts can be used to determine which explanations are appropriate. This important view is demonstrated with examples of the performance of ACCEPTER, a computer system for story understanding, anomaly detection, and explanation evaluation.

Specifications

Contributors

- Author(s):

- Publisher:

Contents

- Number of pages:

- 274

- Language:

- English

- Series:

Properties

- Product code (EAN):

- 9781138969162

- Publication date:

- 2 September 2016

- Binding:

- Paperback

- Format:

- Trade paperback (US)

- Dimensions:

- 152 mm x 229 mm

- Weight:

- 452 g

Only at Standaard Boekhandel

+ 135 points on your Standaard Boekhandel loyalty card

Reviews

We only publish reviews that meet the review terms and conditions. See our review terms and conditions.