- Pick up after 1 hour in a store with stock

- Free home delivery in Belgium from € 30

- Wide range of 7 million products

Description

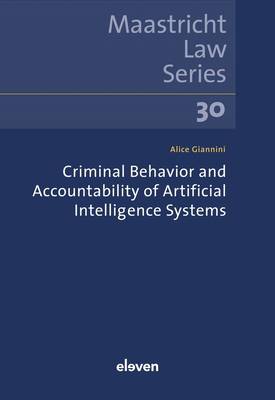

AI systems have the capacity to act in ways that society would generally consider 'criminal'. Yet it can be argued that they lack (criminal) agency - and the feeling of it. In the future, however, humans might come to expect norm-conforming behavior from machines. Criminal law might not be the right answer to AI-related harm, even though holding AI systems directly liable could be useful - to a certain extent. This book explores the criminal responsibility of AI systems by asking whether such a legal framework would be needed and feasible. It aims to understand how to deal with the (apparent) conflict between AI and the most classical notions of criminal law. The emergence of AI is not the first time that criminal law theory has had to deal with new scientific developments. Nevertheless, the debate on the criminal liability of AI systems is somewhat different: it is deeply introspective. In other words, discussing the liability of new artificial agents opens up new perspectives on the liability of human agents. As such, this book poses questions whose answers lie in one's own beliefs about what is human and what is not, and, ultimately, about what is right and what is wrong.

About the Maastricht Law Series: Created in 2018 by Boom Juridisch and Eleven International Publishing in association with the Maastricht University Faculty of Law, the Maastricht Law Series publishes books on comparative, European and international law. The series builds upon the tradition of excellence in research at the Maastricht Faculty of Law, its research centers and the Ius Commune Research School. The Maastricht Law Series is a peer-reviewed book series that offers researchers an excellent opportunity to showcase their work.

Specifications

Contributors

- Author(s):

- Publisher:

Contents

- Number of pages: 285

- Language: English

- Series:

Properties

- Product code (EAN): 9789047301721

- Publication date: 24/11/2023

- Binding: Paperback

- Format: Trade paperback (US)

- Dimensions: 165 mm x 240 mm

- Weight: 485 g

Only at Standaard Boekhandel

Reviews

We only publish reviews that meet our review conditions.