- Pick up in store after 1 hour (where in stock)

- Free home delivery in Belgium on orders from € 30

- Wide range of 7 million products

Description

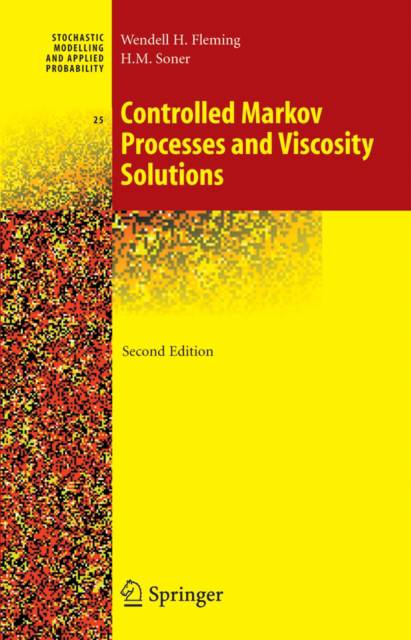

This book is an introduction to optimal stochastic control for continuous-time Markov processes and to the theory of viscosity solutions. The authors approach stochastic control problems by the method of dynamic programming. The text covers dynamic programming for deterministic optimal control problems, as well as the corresponding theory of viscosity solutions. New chapters introduce the role of stochastic optimal control in portfolio optimization and in pricing derivatives in incomplete markets, as well as two-controller, zero-sum differential games. Also covered are controlled Markov diffusions and viscosity solutions of Hamilton-Jacobi-Bellman equations. The authors use illustrative examples and selected material to connect stochastic control theory with other mathematical areas (e.g. large deviations theory) and with applications to engineering, physics, management, and finance.
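The description says the authors approach control problems by dynamic programming. As a rough, hypothetical illustration of that idea (not taken from the book, which works in continuous time), the Python sketch below performs backward induction for a discrete-time controlled Markov chain with finitely many states and actions. The function name `backward_induction` and the two-state toy problem are assumptions made only for this example.

```python
# Hypothetical illustration: a discrete-time, finite-state analogue of dynamic
# programming (backward induction). The book itself treats continuous-time
# processes; this toy problem is not taken from it.

def backward_induction(states, actions, P, cost, terminal, horizon):
    """Minimise expected total cost over `horizon` steps.

    P[(x, u)] maps each next state y to its transition probability,
    cost(x, u) is the running cost and terminal(x) the final cost.
    Returns value functions V[k][x] and a minimising policy pi[k][x].
    """
    V = [{} for _ in range(horizon + 1)]
    pi = [{} for _ in range(horizon)]
    for x in states:
        V[horizon][x] = terminal(x)
    # Work backwards in time: V_k(x) = min_u [c(x, u) + E V_{k+1}(X_{k+1})].
    for k in range(horizon - 1, -1, -1):
        for x in states:
            best_u, best_val = None, float("inf")
            for u in actions:
                expected = sum(p * V[k + 1][y] for y, p in P[(x, u)].items())
                val = cost(x, u) + expected
                if val < best_val:
                    best_u, best_val = u, val
            V[k][x] = best_val
            pi[k][x] = best_u
    return V, pi


# Toy problem (hypothetical): ending in state 1 costs 10, and each attempt
# to "switch" state costs 1 but succeeds only 90% of the time.
STATES = [0, 1]
ACTIONS = ["stay", "switch"]
P = {
    (0, "stay"): {0: 1.0},
    (1, "stay"): {1: 1.0},
    (0, "switch"): {1: 0.9, 0: 0.1},
    (1, "switch"): {0: 0.9, 1: 0.1},
}

V, pi = backward_induction(
    STATES, ACTIONS, P,
    cost=lambda x, u: 1.0 if u == "switch" else 0.0,
    terminal=lambda x: 10.0 if x == 1 else 0.0,
    horizon=2,
)
print(V[0], pi[0])
```

In continuous time, this step-by-step recursion is replaced by a partial differential equation for the value function, which is where the Hamilton-Jacobi-Bellman equations mentioned in the description come in.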

Specifications

Contributors

- Author(s):

- Publisher:

Content

- Number of pages:

- 429

- Language:

- English

- Series:

- Series number:

- No. 25

Properties

- Product code (EAN):

- 9780387260457

- Publication date:

- 17/11/2005

- Cover:

- Hardcover

- Binding:

- Sewn

- Dimensions:

- 161 mm x 242 mm

- Weight:

- 725 g

Only at Standaard Boekhandel
