- Pick up after 1 hour at a store with stock

- Free home delivery in Belgium on orders from € 30

- Wide range of 7 million products

€ 32,95

+ 65 points

Description

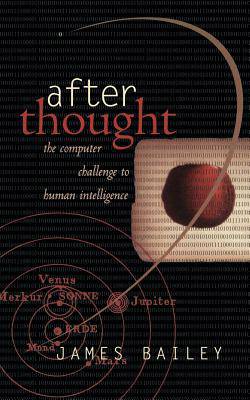

For the first fifty years of the computer revolution, scientists have been trying to program electronic circuits to process information the same way humans do. Doing so has reassured us all that underlying every new computer capability, no matter how miraculously fast or complex, are human thought processes and logic. But cutting-edge computer scientists are coming to see that electronic circuits really are alien: the difference between the human mind and computer capability is not merely one of degree (how fast) but of kind (how). The author suggests that computers "think" best when their "thoughts" are allowed to emerge from the interplay of millions of tiny operations, all interacting with each other in parallel. Why, then, if computers bring such different strengths and weaknesses to the table, are we still trying to program them to think like humans? Ranging widely over the history of ideas, from Galileo to Newton to Darwin, yet just as comfortable in the cutting-edge world of parallel processing that is at this very moment yielding a new form of intelligence, After Thought describes why the real computer age is just beginning.

Specifications

Contributors

- Author(s):

- Publisher:

Contents

- Number of pages: 288

- Language: English

Details

- Product code (EAN): 9780465007820

- Publication date: 1 June 1997

- Binding: Paperback

- Format: Trade paperback (US)

- Dimensions: 136 mm x 204 mm

- Weight: 312 g

Only at Standaard Boekhandel

+ 65 points on your Standaard Boekhandel loyalty card

Reviews

We only publish reviews that meet our review guidelines. See our review guidelines.